Over the last few years, my focus at work has migrated away from Intune and device management to software engineering and the management of cloud native technologies.

This, to the surprise of no one, has been a steep learning curve. One of the big challenges I’ve faced is how to test and develop applications locally in an environment that mimics the production environments that the software will eventually run in.

Over the course of the next few posts, I will be diving into how to set up a local Kubernetes cluster using Docker Desktop, connecting it to Azure using Azure Arc, and then seeing what cool things that “hybrid environment” can do for us.

In this post, let’s start by setting up Kubernetes locally and connecting it to Azure using Azure Arc.

What is Azure Arc?

Azure Arc, put simply, is a service that lets you centrally manage resources running on-premises or in other clouds from a single pane of glass in Azure. This means that you can use Azure’s management tools, security, and governance features to manage resources that are not running in Azure. The best of both worlds!

What this realistically means for us is that we can use Azure Arc to manage our local Kubernetes cluster from Azure without paying for a full Kubernetes cluster in Azure, which is great for testing and development. Not only do we get the central management features of Azure, but because our cluster now has a connection into our Azure subscription, it also opens the door to using other Azure services from our local cluster.

Sounds cool, how much is this going to cost me?

This might be the best part of this whole setup - connecting a Kubernetes cluster to Azure using Azure Arc is completely free! Any Azure services that you use within your cluster will be billed as normal, but getting the cluster connected will cost us nothing.

Official documentation for “Azure Arc-enabled Kubernetes” can be found here.

Getting started - Pre-requisites

Before we can get started, we need to make sure that we have a few things in place:

Software:

- Docker Desktop - Download here

- kubectl - The Kubernetes command-line tool. This will allow us to interact with our cluster.

- Azure CLI - The Azure CLI tool. Optional for most of this post (because we can do almost everything from PowerShell), but it’s a great tool to have on your workstation, and we will use it in the remote access section at the end.

PowerShell Modules:

Install the required PowerShell modules by running the following command:

$modules = @("Az.Accounts", "Az.Resources", "Az.ConnectedKubernetes")

$modules | ForEach-Object { Install-Module -Name $_ -Force -AllowClobber -Scope CurrentUser }

Provider Registration

You will also need to register a few Azure resource providers in your subscription (run Connect-AzAccount first if you have not signed in to Azure yet):

Register-AzResourceProvider -ProviderNamespace Microsoft.Kubernetes

Register-AzResourceProvider -ProviderNamespace Microsoft.KubernetesConfiguration

Register-AzResourceProvider -ProviderNamespace Microsoft.ExtendedLocation

You can monitor the progress of the registration by running the following commands and checking the RegistrationState property (the registration process can take some time):

Get-AzResourceProvider -ProviderNamespace Microsoft.Kubernetes

Get-AzResourceProvider -ProviderNamespace Microsoft.KubernetesConfiguration

Get-AzResourceProvider -ProviderNamespace Microsoft.ExtendedLocation
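If you would rather not re-run those commands by hand, the check can be wrapped in a small polling loop. This is only a sketch: it assumes you are already signed in with Connect-AzAccount, and the 15-second wait is an arbitrary value I picked for illustration.

```powershell
# The three namespaces registered above.
$namespaces = @(
    'Microsoft.Kubernetes'
    'Microsoft.KubernetesConfiguration'
    'Microsoft.ExtendedLocation'
)

# Poll until no resource type in any namespace reports a state other than 'Registered'.
do {
    $pending = @(
        $namespaces |
            ForEach-Object { Get-AzResourceProvider -ProviderNamespace $_ } |
            Where-Object { $_.RegistrationState -ne 'Registered' } |
            Select-Object -ExpandProperty ProviderNamespace -Unique
    )

    if ($pending.Count -gt 0) {
        Write-Host "Still registering: $($pending -join ', ')"
        Start-Sleep -Seconds 15   # arbitrary interval for this sketch
    }
} while ($pending.Count -gt 0)

Write-Host 'All resource providers are registered.'
```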

Setting up a local Kubernetes cluster with Docker Desktop

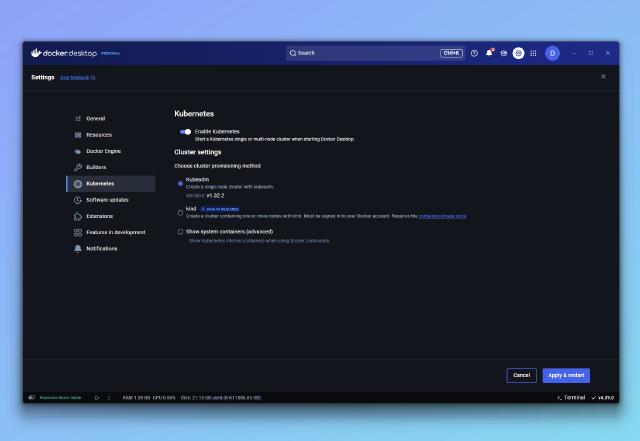

Once you have the above pre-reqs installed, open up Docker Desktop and navigate to the Kubernetes tab in the settings. Simply toggle the Enable Kubernetes option, select Apply & Restart, and Docker Desktop will take care of the rest.

It’s worth noting that this is not the only way to set up a local Kubernetes cluster, but it is a great way to begin experimenting with Kubernetes if you’re new to it.

Docker Desktop will now begin to download, install and configure all necessary components to run a Kubernetes cluster on your local machine. This process is fully automated, but it will take some time. Keep an eye on the status bar at the bottom of the Docker Desktop window to see how the installation is progressing. Once completed, you should see green text in the status bar advising that the Kubernetes cluster is running.

We can now confirm that the cluster is up and running by opening a Terminal window and running the following command:

kubectl get nodes

If everything has been set up correctly, we should see a single node displayed in the terminal with the name “docker-desktop” and a status of Ready.
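If you already work with other clusters, it is worth confirming that kubectl is pointing at the right one before going any further, since the later steps act on whichever context is active. A quick check, assuming Docker Desktop created its usual docker-desktop context:

```powershell
# Show which kubeconfig context kubectl is currently using.
kubectl config current-context

# Switch to the Docker Desktop cluster if a different context is active.
kubectl config use-context docker-desktop
```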

Connecting the cluster to Azure using Azure Arc

Now that we have our local Kubernetes cluster up and running, let’s connect it to Azure.

First, we need to connect to Azure and create a resource group to store the resources that we will create.

$tenantId = 'myTenantId'

$subscriptionId = 'mySubscriptionId'

$resourceGroupName = 'myResourceGroup'

$location = 'eastus'

Connect-AzAccount -Tenant $tenantId -Subscription $subscriptionId

New-AzResourceGroup -Name $resourceGroupName -Location $location

Now that we have our resource group created, we can begin the process of connecting our cluster to Azure.

$k8sParams = @{

ClusterName = 'docker-desktop'

ResourceGroupName = $resourceGroupName

SubscriptionId = $subscriptionId

Location = $location

}

New-AzConnectedKubernetes @k8sParams -Verbose

I like adding -Verbose to the New-AzConnectedKubernetes command because this task can take some time to complete, and the extra output shows what is happening behind the scenes.

Once the command has completed, we should be able to see the cluster in the Azure Portal in the resource group we created. We can also verify that we can see the connected cluster by running the following command:

Get-AzConnectedKubernetes -ClusterName 'docker-desktop' -ResourceGroupName $resourceGroupName
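For a slightly closer look, we can check both ends of the connection. The sketch below lists the Arc agent pods that the onboarding deploys into the azure-arc namespace of the local cluster, then picks a few properties from the Azure-side resource. I am assuming the returned object exposes ProvisioningState and ConnectivityStatus; property names can differ between module versions, so pipe the object to Format-List * if these come back empty.

```powershell
# Local side: the Arc agents run as pods in the azure-arc namespace.
kubectl get pods --namespace azure-arc

# Azure side: fetch the connected cluster resource and show a few key properties.
# ProvisioningState and ConnectivityStatus are assumed property names (see note above).
$cluster = Get-AzConnectedKubernetes -ClusterName 'docker-desktop' -ResourceGroupName $resourceGroupName
$cluster | Select-Object Name, Location, ProvisioningState, ConnectivityStatus
```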

What now?

Now that we have our local Kubernetes cluster connected to Azure, we should probably try and see what we can do with it, right? Hop over to your Azure Portal and navigate to the resource group - you should find your connected cluster there. If we go into that resource and try and view any of the cluster resources, we are going to be asked to sign in to view the local resources…

“Wait,” I hear you say, “I thought we were connecting this to Azure so we could manage it from Azure?”

That’s correct - we have now connected our cluster to Azure, but to access resources inside the cluster, we still need to configure a way to remotely authenticate to it. There are a few ways to do this. To keep things simple and “Azure Native”, let’s give our own account the cluster-admin role in the cluster.

$entraAccountUpn = (Get-AzContext).Account.Id

kubectl create clusterrolebinding cluster-admin-binding --clusterrole=cluster-admin --user=$entraAccountUpn

Note: We are assigning this role to our own Entra account for demonstration purposes. In a real-world scenario, it would be better to assign the role to an Entra security group, and then add users to that group as needed. More options for authentication are available in the Official Documentation.
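Before heading back to the portal, we can sanity-check the binding from the command line. This is a rough check rather than a full test of the Azure sign-in flow: it inspects the binding and then asks the cluster whether a user with our UPN would be allowed to do everything, using kubectl’s impersonation flag. It assumes the signed-in Az context is the same account we bound above.

```powershell
# Look up the UPN of the account in the current Az context.
$entraAccountUpn = (Get-AzContext).Account.Id

# Show the binding, including the role and the user it applies to.
kubectl get clusterrolebinding cluster-admin-binding --output wide

# Ask the API server whether that user may perform any verb on any resource.
# With a cluster-admin binding in place, the expected answer is "yes".
kubectl auth can-i '*' '*' --as=$entraAccountUpn
```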

Now that we have linked our account to the cluster, we can go back to the Azure portal and refresh the page. We should now be able to list and manage all cluster resources natively!

Remote access

Of course, accessing and managing a locally hosted cluster from Azure is just the beginning. Is your cluster hosted on a remote device? Your home lab? If you leverage the Azure CLI “connectedk8s” extension, you can manage your cluster from anywhere without having to save credentials or certificates on your local machine!

To achieve this, open up a terminal window and run the following command:

az connectedk8s proxy --name 'docker-desktop' --resource-group $resourceGroupName

Now, in another terminal window, you can run any kubectl command as if you were running it locally!
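Put together, the whole flow looks roughly like the sketch below. It assumes the Azure CLI is installed and signed in (az login), that the cluster is named docker-desktop as in the rest of this post, and that $resourceGroupName still holds the resource group name from earlier.

```powershell
# One-time setup: install the connectedk8s extension for the Azure CLI.
az extension add --name connectedk8s

# Terminal 1: open the proxy to the Arc-connected cluster.
# This command keeps running until you stop it.
az connectedk8s proxy --name 'docker-desktop' --resource-group $resourceGroupName

# Terminal 2: while the proxy is running, kubectl commands go through it.
kubectl get nodes
```

The proxy command blocks the first terminal for as long as the session is open, which is why the kubectl command has to run in a second window.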

Conclusion

In this post, we have set up a local Kubernetes cluster using Docker Desktop, connected it to Azure using Azure Arc, and then configured our account to manage the cluster from Azure. This is a great way to test and develop applications locally in an environment that mimics the production environments in which the software will eventually run.

In the next few posts, I’m going to dive into setting up workload identity authentication (passwordless access to Azure resources from a locally running cluster??!!!) as well as exploring what else we can do now that we have our “hybrid” environment set up.